Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) is the technique behind many of today's enterprise AI agents. It lets your AI assistant ground its answers in your own content and context instead of hallucinating or making vague guesses.

At EONIQ, we use RAG in almost all of our Agents: the Specification Agent reads coding guidelines and product documentation, and the Claims Checking Agent reads the relevant contracts. But what looks a little like “magic” on the surface is, in fact, a carefully tuned system with many moving parts and potential pitfalls.

In this post, we’ll break down:

- How RAG works (in plain terms)

- Where things often go wrong

- What design choices we make when building your agent

What Is RAG, Really?

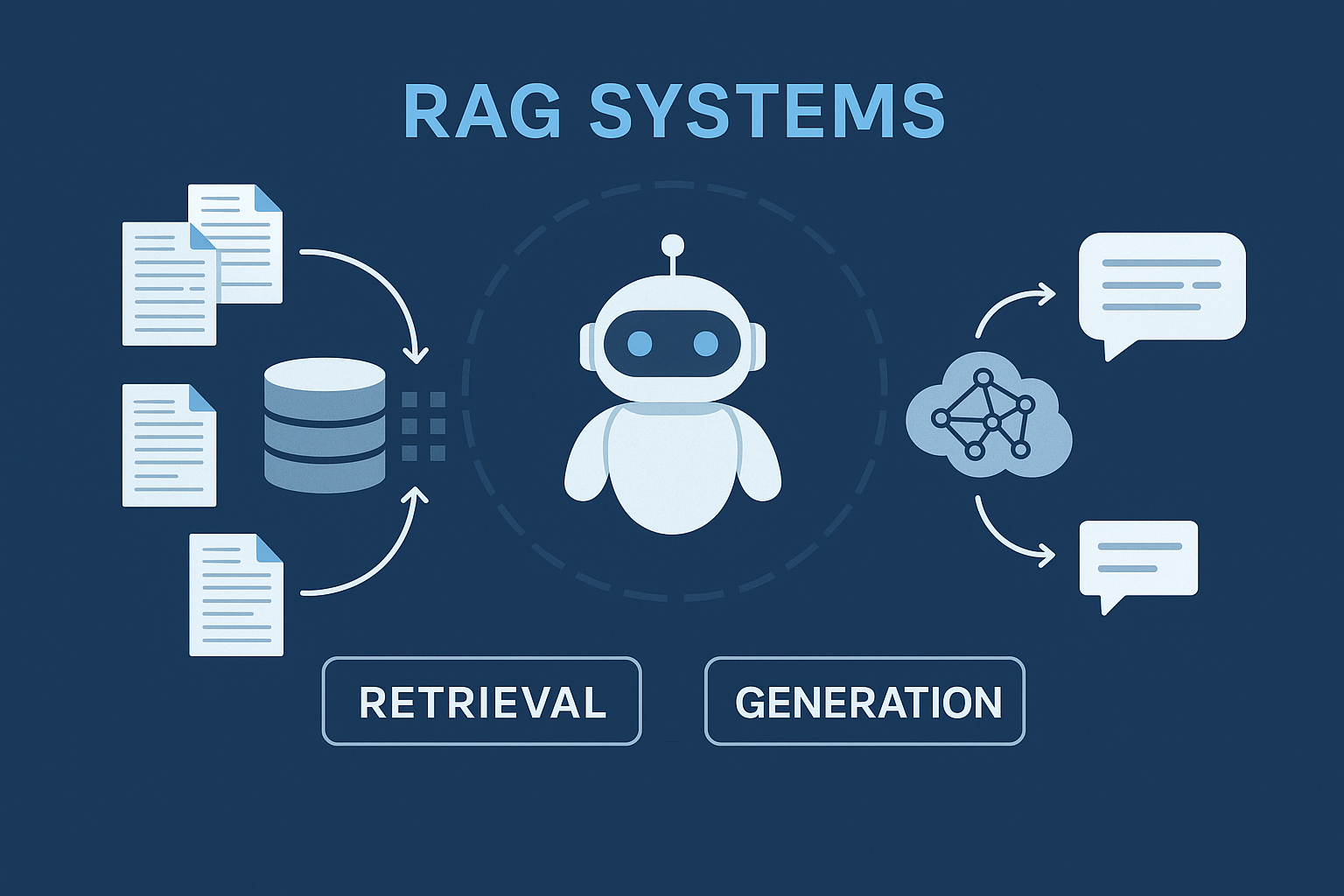

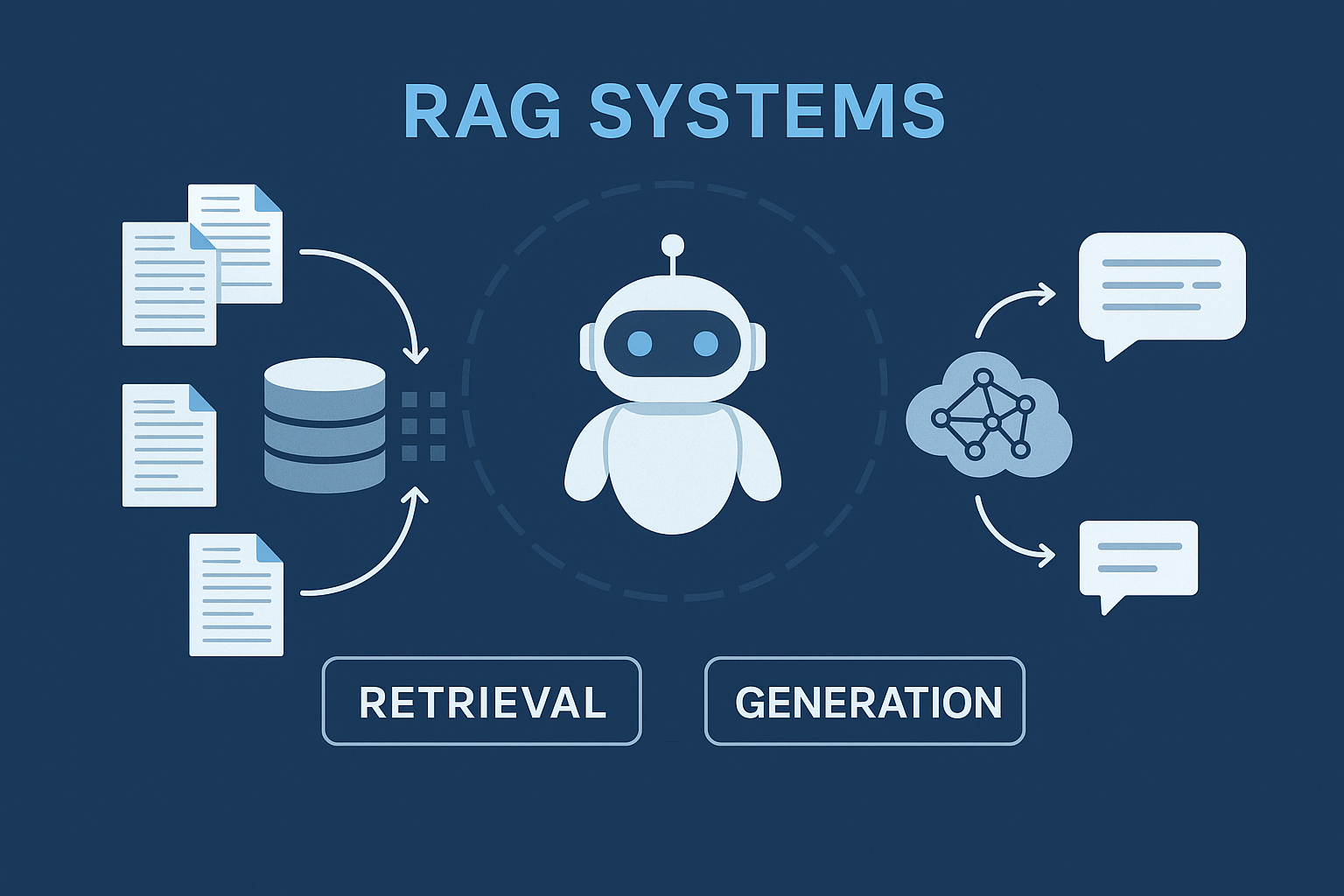

RAG stands for Retrieval-Augmented Generation. It’s a two-step architecture:

- Retrieval: The system finds relevant chunks of data or documents based on your query.

- Generation: A language model (like GPT) uses the retrieved chunks as context to answer your question or complete a task.

This means your agent isn’t just using what it “knows” from training — it’s actively pulling from your documentation, tickets, PDFs, or databases before answering.
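To make the two steps concrete, here is a minimal sketch in Python. It is a toy under stated assumptions, not how a production agent is built: retrieval uses simple word-overlap cosine similarity instead of a real embedding model, and the generation step is only represented by assembling the prompt that would be sent to a language model. The names `retrieve`, `build_prompt`, and the sample `CHUNKS` are hypothetical and chosen for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    # Cosine similarity between two bag-of-words Counters.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, chunks, top_k=2):
    # Step 1 (Retrieval): score every chunk against the query, keep the best.
    # Assumption: word overlap stands in for a real embedding model.
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(c))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for score, c in scored[:top_k] if score > 0]


def build_prompt(query, retrieved):
    # Step 2 (Generation): the retrieved chunks become the model's context.
    # The actual model call is deliberately left out of this sketch.
    context = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(retrieved))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical sample chunks, invented for this example.
CHUNKS = [
    "Claims must be filed within 30 days of the incident.",
    "Function names in our codebase use snake_case.",
    "The office kitchen is cleaned every Friday.",
]

question = "Within how many days must claims be filed?"
print(build_prompt(question, retrieve(question, CHUNKS, top_k=1)))
```

The point of the sketch is the shape of the flow: the model only sees what retrieval hands it, so the quality of the answer depends directly on the quality of the retrieved chunks.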

Core Components of a RAG System

Where RAG Systems Go Wrong

Despite its strengths, RAG can misfire — especially when systems are quickly built or poorly maintained. Here are common pitfalls:

- Bad source material: A great agent starts with great documents. If your PDFs are outdated, fragmented, or full of jargon, the agent won’t magically fix that.

- Wrong chunking strategy: Chunks that are too large bury the relevant passage in noise; chunks that are too small lose the surrounding context the model needs.

- Poorly chosen embedding models: Some work better in legal or technical domains; others excel at general language. Picking the wrong one leads to irrelevant retrievals.

- Naive similarity search: Sometimes the top match is semantically close — but not actually relevant to the user’s intent. That’s why re-ranking and filtering matter.

- Lack of traceability: Users don’t trust agents that “just say things.” Without visible sources or explanations, the output loses credibility.
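Three of these pitfalls (chunking, naive similarity search, and traceability) can be illustrated with a short Python sketch. It is a simplified illustration under stated assumptions: chunks are split by word count with a fixed overlap, filtering is a plain score threshold rather than a real re-ranking model, and the similarity scores are assumed to come from whatever retriever you use. The names `Chunk`, `chunk_words`, and `filter_and_cite`, as well as the default sizes and thresholds, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # document the chunk came from, kept for traceability
    start: int   # word offset of the chunk inside that document
    text: str


def chunk_words(source, text, size=50, overlap=10):
    # Split text into windows of `size` words; neighbouring windows share
    # `overlap` words so a sentence cut at a boundary still appears whole
    # in at least one chunk.
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        window = words[start:start + size]
        chunks.append(Chunk(source, start, " ".join(window)))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def filter_and_cite(scored, min_score=0.3, top_k=3):
    # `scored` is a list of (similarity_score, Chunk) pairs from a retriever.
    # Drop weak matches, keep the best `top_k`, and return each result with
    # its source so the answer can show where it came from.
    kept = [(score, chunk) for score, chunk in scored if score >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [
        {"source": c.source, "start": c.start, "score": s, "text": c.text}
        for s, c in kept[:top_k]
    ]
```

In practice, the threshold step is often replaced or complemented by a dedicated re-ranking model, and the right chunk size depends heavily on the documents. The part worth keeping in any variant is that every chunk carries its source all the way to the final answer.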

How We Build Better RAG-Based Agents

We don’t just use RAG — we tune it for your domain, your documents, and your use case.

Here’s what we do differently:

Why It Matters for You

It is essential that your raw data is cleanly parsed and accessible to your Agent, because it needs to understand your content deeply and use it responsibly.

A well-designed RAG system means:

- Accurate, grounded answers

- Far fewer hallucinations

- Embedded domain knowledge

- Traceability and trust

A poorly designed one?

You get vague responses, frustration, and failed adoption.

Final Thought

RAG is not magic — it’s a system. One that requires careful engineering, clean content, and thoughtful tuning.

At EONIQ, we build custom AI Agents that don’t just work — they work on your knowledge, your rules, and your terms.